Scientific Session

Managing Motion and Artifacts

Session Topic: Managing Motion and Artifacts

Session Sub-Topic: Mind Your Head: Managing Motion in the Brain

Oral

Acquisition, Reconstruction & Analysis

| Tuesday Parallel 4 Live Q&A | Tuesday, 11 August 2020, 13:45 - 14:30 UTC | Moderators: Berkin Bilgic |

Session Number: O-56

0462.

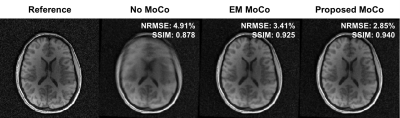

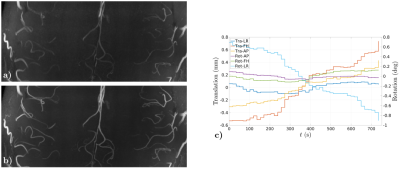

Motion-compensated 3D radial MRI using self-encoded FID navigators

Tess E. Wallace1,2, Davide Piccini3,4,5, Tobias Kober3,4,5, Simon K. Warfield1,2, and Onur Afacan1,2

1Computational Radiology Laboratory, Boston Children's Hospital, Boston, MA, United States, 2Department of Radiology, Harvard Medical School, Boston, MA, United States, 3Advanced Clinical Imaging Technology, Siemens Healthcare, Lausanne, Switzerland, 4Radiology, Lausanne University Hospital and University of Lausanne, Lausanne, Switzerland, 5LTS5, École Polytechnique Fédérale de Lausanne (EPFL), Lausanne, Switzerland

We propose a novel motion compensation strategy for 3D radial MRI that directly estimates rigid-body motion parameters from the central k-space signal, which acts as a self-encoded FID navigator. By modelling trajectory deviations as low-spatial-order field variations, motion parameters can be recovered using a model that predicts the impact of motion and field changes on the FID signal. The proposed method enabled robust compensation for deliberate head motion in volunteers, with position estimates and image quality equivalent to those obtained with electromagnetic tracking. Our approach is suitable for robust neuroanatomical imaging in subjects who exhibit patterns of large, frequent motion.

0463.

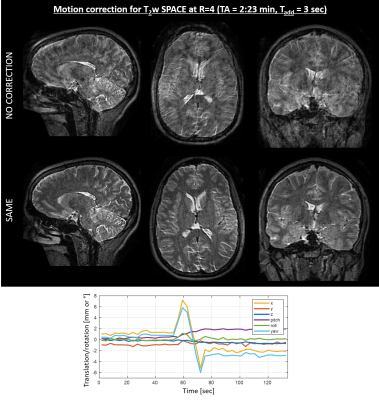

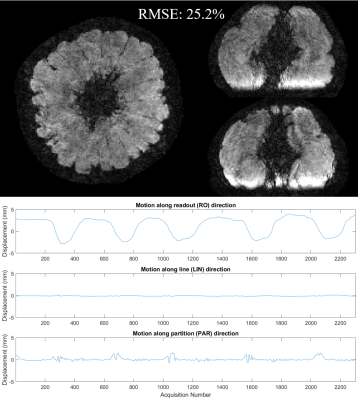

Scout Acquisition enables rapid Motion Estimation (SAME) for retrospective motion mitigation.

Daniel Polak1,2,3, Stephen Cauley2,4,5, Berkin Bilgic2,4,5, Daniel Nicolas Splitthoff3, Peter Bachert1, Lawrence L. Wald2,4,5, and Kawin Setsompop2,4,5

1Department of Physics and Astronomy, Heidelberg University, Heidelberg, Germany, 2Department of Radiology, A. A. Martinos Center for Biomedical Imaging, Charlestown, MA, United States, 3Siemens Healthcare GmbH, Erlangen, Germany, 4Department of Radiology, Harvard Medical School, Boston, MA, United States, 5Harvard-MIT Health Sciences and Technology, Massachusetts Institute of Technology, Cambridge, MA, United States

Navigation-free retrospective motion correction typically requires estimating hundreds of coupled temporal motion parameters by solving a large non-linear inverse problem. This can be extremely demanding computationally, which has impeded implementation and adoption in clinical settings. We propose a technique that uses a single rapid scout scan (Tadd = 3 s) to drastically reduce the computational cost of this motion estimation and create a pathway for clinical acceptance. We optimized this scout along with the sequence acquisition reordering in a 3D Turbo-Spin-Echo acquisition. Our approach was evaluated in vivo with up to R = 6-fold acceleration, and robust motion mitigation was achieved using a scout with differing contrast to the imaging sequence.
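As general background on why retrospective correction works on k-space data, the following toy sketch (not the SAME method itself) illustrates the Fourier shift theorem in 1D: a translation of the object appears as a linear phase ramp on the k-space lines acquired after the move, and applying the opposite ramp to those lines undoes it when the shift is known. The function names, the 8-sample object, the two-shot interleaving and the shift of 2 samples are all hypothetical choices made for illustration.

```python
import cmath
import math


def dft(signal):
    """Naive 1D discrete Fourier transform (O(N^2), fine for a small demo)."""
    n = len(signal)
    return [
        sum(signal[x] * cmath.exp(-2j * math.pi * k * x / n) for x in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Naive 1D inverse discrete Fourier transform."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * math.pi * k * x / n) for k in range(n)) / n
        for x in range(n)
    ]


def translate_kspace(spectrum, shift):
    """Apply a circular translation of `shift` samples as a linear phase ramp."""
    n = len(spectrum)
    out = []
    for k in range(n):
        # Use a signed frequency index so the ramp is centred on k = 0.
        kk = k if k <= n // 2 else k - n
        out.append(spectrum[k] * cmath.exp(-2j * math.pi * kk * shift / n))
    return out


def max_abs_error(image, reference):
    """Largest absolute difference between a complex image and a real reference."""
    return max(abs(a - b) for a, b in zip(image, reference))


# Hypothetical 1D "object" and a known translation of 2 samples.
obj = [0.0, 0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0]
shift = 2

kspace_still = dft(obj)
kspace_moved = translate_kspace(kspace_still, shift)

# Assumed two-shot acquisition: even lines acquired before the move,
# odd lines acquired after it.
n = len(obj)
corrupted = [kspace_still[k] if k % 2 == 0 else kspace_moved[k] for k in range(n)]

# Retrospective correction: undo the phase ramp on the odd (moved) lines only.
unshifted = translate_kspace(corrupted, -shift)
corrected = [corrupted[k] if k % 2 == 0 else unshifted[k] for k in range(n)]

err_corrupted = max_abs_error(idft(corrupted), obj)
err_corrected = max_abs_error(idft(corrected), obj)

print("max error without correction:", err_corrupted)
print("max error with correction:", err_corrected)
```

In this sketch the motion is known exactly. In a navigation-free setting the shift for each shot would itself be an unknown to estimate, which is where the large inverse problem described above comes from.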

0464.

Measurement of head motion using a field camera in a 7T scanner

Laura Bortolotti1, Olivier Mougin1, and Richard Bowtell1

1Physics, Sir Peter Mansfield Imaging Centre, University of Nottingham, Nottingham, United Kingdom

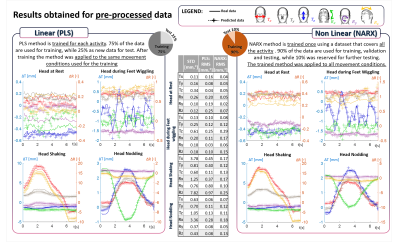

In this work, a step towards a non-contact motion correction technique has been made. Measurements of extra-cranial field perturbations made using a 16-channel magnetic field camera have been used to predict head motion parameters with good accuracy. The prediction was performed using both linear (PLS) and non-linear (NARX) methods. The number of field probes used for the prediction was reduced by performing Principal Component Analysis. Magnetic field data were also pre-processed to reduce the unwanted effect of chest movement during respiration. NARX outperformed the PLS approach, producing good predictions of head position changes for a wider range of movements.

0465.

MOCO-BUDA: motion-corrected blip-up/down acquisition with joint reconstruction for motion-robust and distortion-free diffusion MRI of brain

Xiaozhi Cao1,2,3, Congyu Liao2,3, Zijing Zhang2,4, Mary Kate Manhard2,3, Hongjian He1, Jianhui Zhong1, Berkin Bilgic2,3,5, and Kawin Setsompop2,3,5

1Center for Brain Imaging Science and Technology, Department of Biomedical Engineering, Zhejiang University, Hangzhou, China, 2Athinoula A. Martinos Center for Biomedical Imaging, Massachusetts General Hospital, Charlestown, MA, United States, 3Department of Radiology, Harvard Medical School, Charlestown, MA, United States, 4State Key Laboratory of Modern Optical Instrumentation, College of Optical Science and Engineering, Zhejiang University, Hangzhou, China, 5Harvard-MIT Department of Health Sciences and Technology, Cambridge, MA, United States

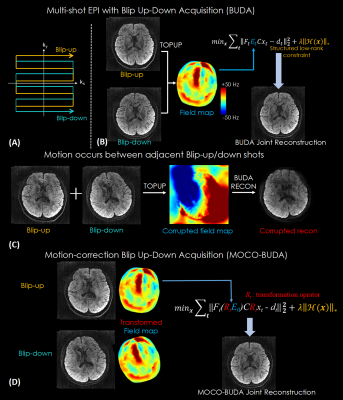

We propose a motion-correction method for joint reconstruction of blip-up/down EPI acquisitions (BUDA-EPI) in brain diffusion MRI. Motion parameters were estimated and incorporated into the joint parallel imaging reconstruction of the blip-up/down multi-shot data, which included B0 field maps and a Hankel structured low-rank constraint. The proposed motion-corrected reconstruction approach was demonstrated in vivo to provide motion-robust reconstruction of blip-up/down multi-shot EPI diffusion data.

0466.

Comparison of Prospective and Retrospective Motion Correction for 3D Structural Brain MRI

Jakob Slipsager1,2,3,4, Stefan Glimberg4, Liselotte Højgaard2, Rasmus Paulsen1, Andre van der Kouwe3,5, Oline Olesen1,4, and Robert Frost3,5

1DTU Compute, Technical University of Denmark, Kgs. Lyngby, Denmark, 2Department of Clinical Physiology, Nuclear Medicine & PET, Rigshospitalet, University of Copenhagen, Copenhagen, Denmark, 3Athinoula A. Martinos Center for Biomedical Imaging, Massachusetts General Hospital, Charlestown, MA, United States, 4TracInnovations, Ballerup, Denmark, 5Department of Radiology, Harvard Medical School, Boston, MA, United States

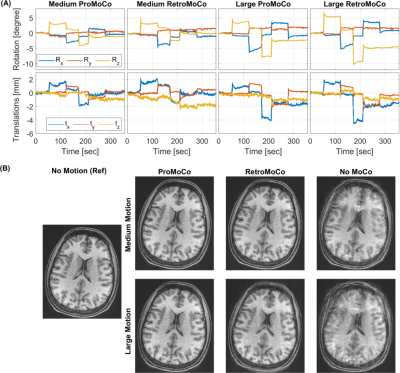

This work compares prospective and retrospective motion correction based on their ability to remove motion artifacts from 3D-encoded MPRAGE scans. Motion artifacts are a major concern in clinical and research brain MRI; their consequences include repeated scans and the need for patient sedation or anesthesia, which increase study time and cost. Both the prospective and retrospective correction approaches substantially improved the image quality of in vivo scans for similar motion patterns. Prospective motion correction resulted in higher image quality than retrospective correction for larger discrete movements and for periodic motion.

0467.

Scan-specific assessment of vNav motion artifact mitigation in the HCP Aging study using reverse motion correction

Robert Frost1,2, M. Dylan Tisdall3, Malte Hoffmann1,2, Bruce Fischl1,2,4, David H. Salat1,2, and André J. W. van der Kouwe1,2

1Athinoula A. Martinos Center for Biomedical Imaging, Massachusetts General Hospital, Charlestown, MA, United States, 2Department of Radiology, Harvard Medical School, Boston, MA, United States, 3Department of Radiology, Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA, United States, 4Computer Science and Artificial Intelligence Laboratory, Massachusetts Institute of Technology, Cambridge, MA, United States

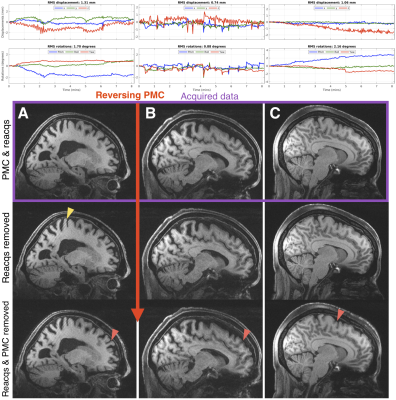

In studies that acquire a single prospectively-corrected scan it is unclear whether motion correction was beneficial when inspecting residual artifacts and the motion profiles. Here we used reverse motion correction to estimate images that would have resulted without vNav prospective motion correction (PMC). Matched motion tests were used to assess whether the reverse correction step was an accurate representation of images acquired during similar motion but without PMC. Using reverse motion correction on a subset of scans from the Human Connectome Project Aging study suggests that vNav PMC and selective reacquisition substantially improved image quality when there was motion.

0468.

Preserved high resolution brain MRI by data-driven DISORDER motion correction

Lucilio Cordero-Grande1, Raphael Tomi-Tricot2, Giulio Ferrazzi3, Jan Sedlacik1, Shaihan Malik1, and Joseph V Hajnal1

1Centre for the Developing Brain and Biomedical Engineering Department, School of Biomedical Engineering and Imaging Sciences, King's College London, London, United Kingdom, 2Centre for the Developing Brain and Biomedical Engineering Department, School of Biomedical Engineering and Imaging Sciences / MR Research Collaborations, King's College London / Siemens Healthcare Limited, London / Frimley, United Kingdom, 3Biomedical Engineering Department, School of Biomedical Engineering and Imaging Sciences, King's College London, London, United Kingdom

Retrospective motion correction is applied for preserved image resolution on ultra-high field volumetric in-vivo brain MRI. Correction is based on the synergistic combination of appropriate view reorderings for increasing the sensitivity to motion and aligned reconstructions for deconvolving the effect of motion. Resolution loss introduced by motion is reverted without resorting to external motion tracking systems, navigators or training data. Contrast and sharpness improvements are shown on high resolution flow and susceptibility sensitive T1- and T2*-weighted spoiled gradient echo sequences acquired on cooperative volunteers.

0469.

Motion estimation and correction with joint optimization for wave-CAIPI acquisition

Zhe Wu1 and Kâmil Uludağ1,2,3

1Techna Institute, University Health Network, Toronto, ON, Canada, 2Koerner Scientist in MR Imaging, University Health Network, Toronto, ON, Canada, 3Center for Neuroscience Imaging Research, Institute for Basic Science & Department of Biomedical Engineering, Sungkyunkwan University, Suwon, Korea, Republic of

Wave-CAIPI is a recently introduced parallel imaging method with a high reduction factor and low g-factor penalty, and is therefore less prone to motion in patients who cannot hold still for long. This study revealed that wave-CAIPI is still sensitive to motion during the scan, and proposed a joint optimization method to estimate motion and mitigate the resulting artifacts in wave-CAIPI images.

0470.

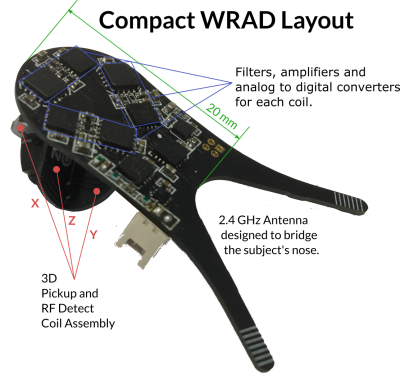

A method for controlling wireless hardware using the pulse sequence, applications in prospective motion correction.

Adam M. J. van Niekerk1, Tim Sprenger2,3, Henric Rydén1,2, Enrico Avventi1,2, Ola Norbeck1,2, and Stefan Skare1,2

1Clinical Neuroscience, Karolinska Institutet, Stockholm, Sweden, 2Neuroradiology, Karolinska University Hospital, Stockholm, Sweden, 3MR Applied Science Laboratory Europe, GE Healthcare, Stockholm, Sweden

We explore a real-time method of controlling a wireless device from the pulse sequence, using a series of short RF pulses. We show that it is possible to encode and detect eight unique identifiers with high reliability in 52 μs. Some identifiers are followed by short (< 1 ms) navigators that encode the pose of the device in the imaging volume. Other identifiers are followed by more RF pulses that encode information used for device configuration. These tools minimise the impact on the main pulse sequence and allow the device to tailor feedback precision to the pulse sequence requirements.

0471.

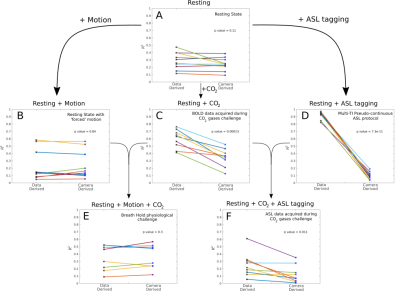

Correcting motion registration errors caused by global intensity changes during CVR and CBF measurements.

Ryan Beckerleg1, Joseph Whittaker1, Daniel Gallichan2, and Kevin Murphy1

1CUBRIC, School of Physics and Astronomy, Cardiff University, Cardiff, United Kingdom, 2CUBRIC, School of Engineering, Cardiff University, Cardiff, United Kingdom

Motion correction is an important preprocessing step in fMRI research1. Motion artefacts not only affect image quality but can also lead to erroneous results; they are normally corrected using a volume registration algorithm (VRA). Here we demonstrate that when global intensity changes are present in the data (e.g., caused by a CO2 challenge during measurement of cerebrovascular reactivity (CVR) or by ASL tagging), the VRA misinterprets such intensity changes as motion. We compare the motion derived from the VRA with motion parameters derived from an external optical tracking system to determine the extent of the problem.
