Alix Plumley¹, Luke Watkins¹, Kevin Murphy¹, and Emre Kopanoglu¹

¹Cardiff University Brain Research Imaging Centre, Cardiff, United Kingdom

We present a deep learning approach to estimate motion-resolved parallel-transmit B1+ distributions using a system of conditional generative adversarial networks. The estimated maps can be used for real-time pulse re-design, eliminating motion-related flip-angle error.

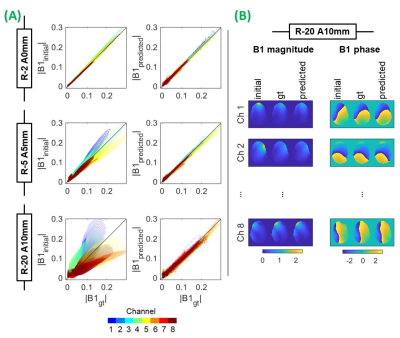

Fig.4 (A) Voxelwise magnitude correlations between B1initial (initial position) and B1gt (ground-truth displaced) [left], and between B1predicted (network-predicted displaced) and B1gt [right]. Small (top), medium (middle) and large (bottom) displacements are shown. Phase data not shown. (B) Magnitude (in a.u.) and phase (radians) B1-maps for the largest displacement. Left, middle and right: B1initial, B1gt and B1predicted, respectively. B1predicted quality did not depend heavily on displacement magnitude and remained high despite 5 network cascades for evaluation at R-20 A10mm.

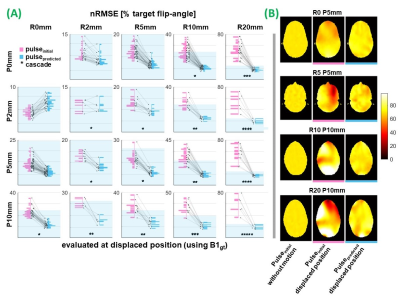

Fig.3 (A) Motion-induced flip-angle error for pTx pulses designed using B1initial (conventional method, pink) & B1predicted (proposed method, blue) at all evaluated positions & slices. Y-axes show nRMSE (% target flip-angle). nRMSE of all pulsespredicted is at/below the shaded region. Asterisks show number of network cascades required for evaluation. (B) Example profiles. Left: evaluation for pulseinitial without motion. Middle & right: evaluations at B1gt (displaced position) using pulseinitial & pulsepredicted, respectively. Colorscale shows flip-angle (target=70°).
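
The nRMSE metric on the y-axes of Fig.3 can be illustrated with a short sketch. The exact definition used in the abstract is not stated, so this assumes nRMSE is the root-mean-square deviation of the simulated flip-angle from the target, expressed as a percentage of the target. The function name `nrmse_percent`, the optional `mask` argument, and the example values are hypothetical and chosen only for illustration.

```python
import numpy as np


def nrmse_percent(flip_angle_deg, target_deg, mask=None):
    """RMS flip-angle error as a percentage of the target (assumed definition)."""
    fa = np.asarray(flip_angle_deg, dtype=float)
    if mask is not None:
        # Restrict the evaluation to voxels inside the region of interest.
        fa = fa[np.asarray(mask, dtype=bool)]
    rmse = np.sqrt(np.mean((fa - target_deg) ** 2))
    return 100.0 * rmse / target_deg


# Toy 2x2 flip-angle profile in degrees (made-up values, not data from the abstract).
profile = np.array([[70.0, 63.0],
                    [77.0, 70.0]])
error = nrmse_percent(profile, target_deg=70.0)
print(f"nRMSE = {error:.2f}% of target")
```

Under this assumed definition, a profile that matches the 70° target everywhere gives 0%, and the same function can be applied to profiles from pulseinitial and pulsepredicted to compare the two at a displaced position.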